...

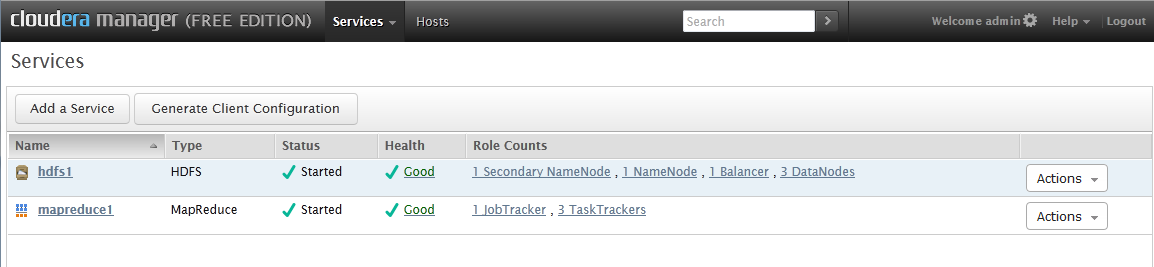

On the next screen, select the basic installation, which includes MapReduce, Hue and HDFS, and follow the prompts. Once everything is installed, you should see a screen that looks like this:

Ikanow recommends changing some configuration settings before using the API server. These changes are optional (you can skip ahead to Generating Client Configuration if you want to get started with the default settings).

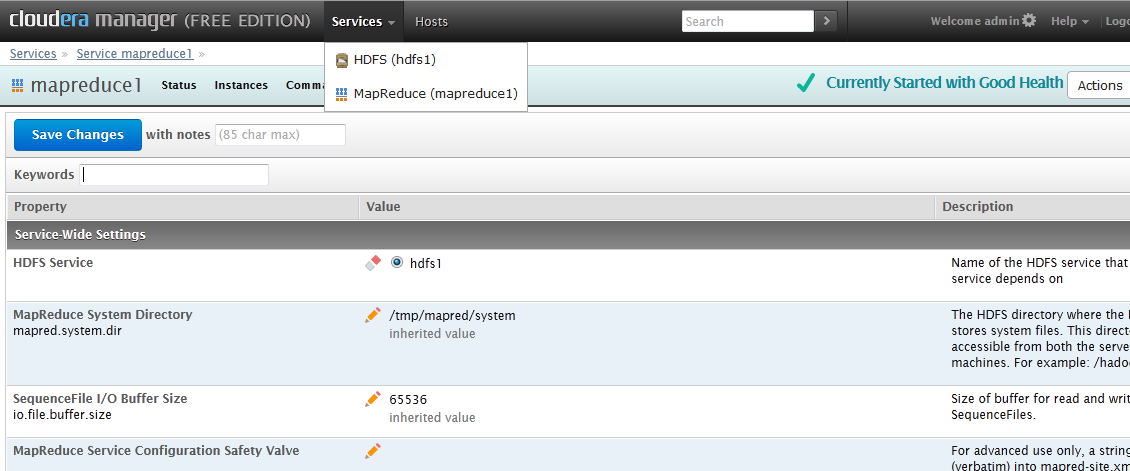

To change the MapReduce settings, click the mapreduce1 service and then click the Configuration button at the top. You should see a screen like this:

We recommend changing these items:

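- Number of tasks to run per JVM (mapred.job.reuse.jvm.num.tasks) to -1, which lets a JVM be reused for an unlimited number of tasks

- Use Compression on Map Outputs (mapred.compress.map.output) to false (uncheck the box)

- Maximum Number of Simultaneous Map Tasks (mapred.tasktracker.map.tasks.maximum) to 2

- Maximum Number of Simultaneous Reduce Tasks (mapred.tasktracker.reduce.tasks.maximum) to 1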
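If you want to see the recommended values as raw Hadoop properties (for example, to compare them against an existing mapred-site.xml), the minimal Python sketch below builds a Hadoop-style configuration fragment. The property names used for the two task limits (mapred.tasktracker.map.tasks.maximum and mapred.tasktracker.reduce.tasks.maximum) are our assumption about what the Cloudera Manager fields map to. In Cloudera Manager you should still set these values through the UI as described above.

```python
import xml.etree.ElementTree as ET

# Recommended MRv1 settings from the list above. The two tasktracker
# property names are ASSUMED to be what Cloudera Manager's
# "Maximum Number of Simultaneous Map/Reduce Tasks" fields map to.
RECOMMENDED_SETTINGS = {
    "mapred.job.reuse.jvm.num.tasks": "-1",          # no limit on tasks per reused JVM
    "mapred.compress.map.output": "false",           # do not compress map output
    "mapred.tasktracker.map.tasks.maximum": "2",     # map slots per TaskTracker
    "mapred.tasktracker.reduce.tasks.maximum": "1",  # reduce slots per TaskTracker
}


def build_configuration(settings: dict[str, str]) -> str:
    """Return a Hadoop-style <configuration> document for the given properties."""
    root = ET.Element("configuration")
    for name, value in settings.items():
        prop = ET.SubElement(root, "property")
        ET.SubElement(prop, "name").text = name
        ET.SubElement(prop, "value").text = value
    ET.indent(root)  # pretty-print the tree (Python 3.9+)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(build_configuration(RECOMMENDED_SETTINGS))
```

Each setting becomes one `<property>` element with a `<name>` and `<value>` child, which is the layout Hadoop's *-site.xml files use.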

After making these changes, return to the main screen by clicking the Services link at the top or the Cloudera Manager header in the top left.

Here, click the Generate Client Configuration button, which downloads a zip file. Extract the zip file and put the resulting folder somewhere on your local machine where you can find it easily. The files in this folder need to be copied to the configuration folder on every node. By default, the configuration folder is /mnt/opt/hadoop-home/mapreduce/hadoop/

The folder usually contains core-site.xml, hadoop-env.sh, hdfs-site.xml, log4j.properties, mapred-site.xml, README.txt and ssl-client.xml.example. Transfer all of these files into the configuration folder.
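Copying the files by hand gets tedious on larger clusters. The following Python sketch assumes you have SSH access to every node and that scp is installed locally; the node names are hypothetical placeholders. It warns if any of the files listed above are missing from the extracted folder and, by default, only prints the scp commands it would run.

```python
import shlex
import subprocess
from pathlib import Path

# Default configuration folder on each node (from the text above).
CONFIG_DIR = "/mnt/opt/hadoop-home/mapreduce/hadoop/"

# Files the generated client configuration usually contains.
EXPECTED_FILES = [
    "core-site.xml", "hadoop-env.sh", "hdfs-site.xml", "log4j.properties",
    "mapred-site.xml", "README.txt", "ssl-client.xml.example",
]


def missing_files(local_dir: str) -> list[str]:
    """Return the expected files that are absent from the extracted folder."""
    folder = Path(local_dir)
    return [name for name in EXPECTED_FILES if not (folder / name).is_file()]


def copy_commands(files: list[str], nodes: list[str]) -> list[list[str]]:
    """Build one scp command per node that copies all files into CONFIG_DIR.

    Each entry in nodes is an SSH destination such as "node1" or
    "root@node1" (hypothetical host names).
    """
    return [["scp", *files, f"{node}:{CONFIG_DIR}"] for node in nodes]


def push_config(local_dir: str, nodes: list[str], dry_run: bool = True) -> None:
    """Copy every file in local_dir to each node; only print commands if dry_run."""
    missing = missing_files(local_dir)
    if missing:
        print("Warning: missing expected files:", ", ".join(missing))
    files = sorted(str(p) for p in Path(local_dir).iterdir() if p.is_file())
    for cmd in copy_commands(files, nodes):
        if dry_run:
            print(shlex.join(cmd))  # show the command without running it
        else:
            subprocess.run(cmd, check=True)  # stop on the first failed copy
```

For example, `push_config("hadoop-conf", ["node1", "node2"])` (a hypothetical folder and hypothetical hosts) prints one scp command per node; pass `dry_run=False` to actually copy. Writing under /mnt/opt may require elevated permissions, so you may need a destination such as root@node1.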

Once you have completed these steps, you should be able to schedule MapReduce jobs via the API call: custom/mapreduce/schedulejob
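As a rough illustration only, the Python sketch below builds a request URL for the schedulejob endpoint. The base URL is a hypothetical placeholder, and passing job arguments as percent-encoded path segments is an assumption on our part. Consult the Ikanow API documentation for the actual parameters, their order and how to authenticate.

```python
from urllib.parse import quote

# HYPOTHETICAL base URL of your Ikanow API server; replace with your own.
API_BASE = "http://localhost:8080/api/"

# Endpoint named in the text above.
SCHEDULE_JOB = "custom/mapreduce/schedulejob"


def schedule_job_url(*args: str, base: str = API_BASE) -> str:
    """Append percent-encoded arguments to the schedulejob endpoint.

    ASSUMPTION: arguments are passed as path segments. The real order
    and meaning of the arguments are not specified here.
    """
    segments = [quote(str(arg), safe="") for arg in args]  # encode "/" and spaces too
    return base.rstrip("/") + "/" + "/".join([SCHEDULE_JOB, *segments])
```

Encoding every segment with `safe=""` keeps characters such as "/" inside an argument from being read as extra path levels.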