Overview

The Plugin Manager GUI is not currently compatible with IE. It is compatible with Chrome, Firefox, and Safari.

The plugin manager provides a simple web-based user interface for uploading new and updated map reduce plugins and saved queries to the system, and for sharing them across the different communities.

If you want to use a map reduce plugin for many jobs, use the file uploader to upload the JAR once and then use the plugin manager to schedule each job that uses the common JAR.

Logging In

The plugin manager can be accessed from <ROOT_URL>/manager/pluginManager.jsp (eg http://infinite.ikanow.com/manager/pluginManager.jsp). It can also be reached from the home page of the Manager webapp (itself linked from the main visualization GUI).

The following URL parameters are supported:

- Use "?sudo" to view jobs you don't own but which are shared with you (or all jobs if you are an admin), eg http://infinite.ikanow.com/manager/pluginManager.jsp?sudo

Opening the plugin manager brings up username and password fields and a login button (unless you are already logged in, eg into the manager or main GUI, in which case skip to the next section).

Note that:

- The plugin manager shares its cookie with the main GUI, the file uploader, the source builder, and the person manager - logging into any one of them logs you into all of them.
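The URL layout described in this section can be sketched in a few lines of Python. This is only an illustration: the helper name `plugin_manager_url` is hypothetical, and the sketch assumes the page always lives at `/manager/pluginManager.jsp` under the root URL and that "sudo" is passed as a bare query parameter.

```python
from urllib.parse import urlsplit, urlunsplit


def plugin_manager_url(root_url: str, sudo: bool = False) -> str:
    """Build the plugin manager URL under root_url (hypothetical helper).

    Assumes the page path given in this section; "sudo" is appended as a
    bare query parameter when requested.
    """
    parts = urlsplit(root_url.rstrip("/") + "/manager/pluginManager.jsp")
    query = "sudo" if sudo else ""
    return urlunsplit((parts.scheme, parts.netloc, parts.path, query, ""))


print(plugin_manager_url("http://infinite.ikanow.com"))
print(plugin_manager_url("http://infinite.ikanow.com", sudo=True))
```

The second call produces the "?sudo" form of the address, which lists jobs shared with you as well as the ones you own.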

Scheduling a new Map Reduce Plugin

The above figure shows the tool shortly after log-in.

To upload the plugin, simply fill in the fields, select the file from your hard drive or a network drive, and select "Submit".

Note that you can select either a single or multiple (CTRL+click) communities. You can share plugins with any available community. If you upload to only your personal community, only you (and system administrators) will have access to the file.

The "query" field has a few noteworthy points:

- Some additional control fields can be supplied, as described here.

- The most common control fields can be specifically added with their default value by pressing the "Add Options" button

- The query must be a MongoDB query (use the /wiki/spaces/INF/pages/4358642, or content format) or, from March 2014, an Infinit.e query JSON object. Note that Infinit.e queries will usually be slower than indexed MongoDB queries, so they should be used with care.

- Press the "Check" button next to the "Query" field to validate the query JSON.

- (From March 2014) You can paste a saved workspace link into the query field (eg from the GUI) instead of typing out the JSON to generate an Infinit.e style query. You can include JSON below that to add query qualifiers, as described here.
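If you prepare queries outside the GUI, a local check similar in spirit to the "Check" button can catch malformed JSON before you paste it in. The following is a minimal sketch, not the plugin manager's actual validation: the function name `check_query` is hypothetical, the requirement that the query be a JSON object is an assumption, and the `title` field in the example is made up for illustration.

```python
import json
from typing import Tuple


def check_query(text: str) -> Tuple[bool, str]:
    """Return (ok, message) for a candidate query string (hypothetical helper)."""
    try:
        parsed = json.loads(text)
    except json.JSONDecodeError as err:
        return False, f"Invalid JSON at line {err.lineno}, column {err.colno}: {err.msg}"
    # Assumption: both MongoDB and Infinit.e queries are JSON objects.
    if not isinstance(parsed, dict):
        return False, "Query must be a JSON object"
    return True, "OK"


# "title" is an illustrative field name, not one taken from the document schema.
print(check_query('{"title": {"$regex": "report"}}'))
print(check_query('{"title": }'))
```

This only checks syntax; it does not confirm that the fields exist or that the query is efficient.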

Note: If you set append results to false, there is no need to set an age out.

Note: If you don't want your job to depend on another job's completion, do not select any job dependencies (you can CTRL-click to remove selected options if necessary).

Note: the "user arguments" field can be any string; it is interpreted by the code in the Hadoop JAR. For custom plugin developers: see this tutorial for a description of how to incorporate user arguments in the code (under advanced topics). Since the user arguments will normally be JSON or javascript (see info box below), a "Check" button is provided that validates either of those two formats.

In particular, the built-in "HadoopJavascriptTemplate" template job uses the "user arguments" to hold the javascript code that gets executed in Hadoop.

Note: You can temporarily remove a combiner or reducer by putting "#" in front of it. Only the mapper is mandatory (others can be set to "none"), though normally at least the mapper and reducer are set.
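The "#" and "none" conventions for the class fields can be pictured with the sketch below. It is an assumption about how the fields might be interpreted, not the plugin manager's own code: the function names are hypothetical, the `com.example` class names are placeholders, and treating a blank field as disabled is also an assumption.

```python
from typing import Dict, Optional


def active_class(field: str) -> Optional[str]:
    """Return the class name to run, or None if the field is disabled."""
    value = field.strip()
    # Assumption: blank, "none", or a leading "#" all mean "do not use".
    if not value or value.lower() == "none" or value.startswith("#"):
        return None
    return value


def resolve_classes(mapper: str, combiner: str, reducer: str) -> Dict[str, Optional[str]]:
    """Resolve the three class fields; only the mapper is mandatory."""
    resolved = {
        "mapper": active_class(mapper),
        "combiner": active_class(combiner),
        "reducer": active_class(reducer),
    }
    if resolved["mapper"] is None:
        raise ValueError("A mapper class is mandatory for a map reduce job")
    return resolved


# Placeholder class names; the combiner is temporarily disabled with "#".
print(resolve_classes("com.example.MyMapper", "#com.example.MyCombiner", "com.example.MyReducer"))
```

Keeping the "#"-prefixed name in the field means the combiner or reducer can be restored later by deleting a single character.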

Submit vs QuickRun

There are 2 options for submitting a job:

- Submit (button to the right of "Title")

- QuickRun (button to the right of "Frequency")

Submit will save the task (or update it if it already exists). If the frequency is not "Never" and the "Next Scheduled Time" is now or in the past, then the job is scheduled immediately. The page refreshes immediately (unlike "QuickRun" below), and progress can be monitored as described under "Following a job's progress" below.

"QuickRun" sets the frequency to "Once Only" and the time to "ASAP" (as soon as possible), and then does 2 things:

- Submits the job as above.

- Waits for the job to complete before refreshing the page (all the "action" buttons are disabled in the meantime). You can't see the progress (see below) while waiting, so this is best used on smaller jobs.
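The difference between the two buttons can be summarised with a small model. This is a sketch under stated assumptions rather than the scheduler's real logic: the `Job` class and both function names are hypothetical, and "ASAP" is modelled simply as the current time.

```python
from dataclasses import dataclass, replace
from datetime import datetime


@dataclass(frozen=True)
class Job:
    """Hypothetical stand-in for the fields that affect scheduling."""
    title: str
    frequency: str       # eg "Never" or "Once Only"
    next_run: datetime   # the "Next Scheduled Time" field


def scheduled_immediately(job: Job, now: datetime) -> bool:
    """Submit rule: frequency is not "Never" and the scheduled time is due."""
    return job.frequency != "Never" and job.next_run <= now


def quick_run(job: Job, now: datetime) -> Job:
    """QuickRun rule: force "Once Only" and "ASAP" (modelled here as now)."""
    return replace(job, frequency="Once Only", next_run=now)


now = datetime(2014, 3, 1, 12, 0)
saved = Job("example job", "Never", datetime(2014, 3, 1, 11, 0))
print(scheduled_immediately(saved, now))
print(scheduled_immediately(quick_run(saved, now), now))
```

In this model a job saved with frequency "Never" is stored but not run, while the same job passed through `quick_run` always qualifies for immediate scheduling.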

You can also debug tasks; this is described in the next section.

Debugging new and existing tasks

Just above "user arguments" there is a "Save and debug" button. This is very similar to pressing the "QuickRun" button described above, except:

- It will only run on the number of records specified in the text box next to the button.

- It will always run the Hadoop JAR in "local mode" (ie it won't be distributed to the Hadoop cluster, if one exists)

- Any log messages output by the Hadoop JAR (or in the javascript if running the prototype engine) are collected and output to the status message

- Note that when running in local mode, "QuickRun" will also log error messages. In the typical cluster mode nothing is currently logged, so the debug mode is necessary in that case. The alternative is running and testing in Eclipse as described here, which is quite involved.

Stopping a running task (kill)

Simply set the "Frequency" to "None" and re-submit. It may take up to a minute for the status to show the updated task status.

Scheduling a new Saved Query

To schedule a saved query instead of a map reduce plugin, follow the same process as for scheduling a map reduce job, with these changes:

- Select the "query only" option for the JAR (select "re-use existing JAR" first)

- Leave Mapper, Combiner, and Reducer class blank

- Enter a valid query in the query field. A good place to start is saving queries from the GUI under the Advanced -> Save option; these can be pasted into the query or "User arguments" field. (Note that, unlike the normal map/reduce case, the query in this instance must be an Infinit.e query, not a MongoDB query.)

(From March 2014) Note that you can paste a saved workspace link into the query field (eg from the GUI) instead of typing out the JSON.

Editing existing plugins/saved queries

After log-in, all plugins you own can be seen from the top drop-down menu (initially called "Upload New File"). You can also see all plugins in your community (see info box below). If you are an administrator, all plugins in the system can be seen. You can only edit a plugin if you are an admin, a community moderator, or its owner, though you can see the results of any plugin in the drop-down.

Apart from HadoopJavascriptTemplate, by default only shares you own are visible, even as admin/moderator (this will be fixed in a future release). To see all plugins that you can access via the API, add "?sudo" to the URL (eg "http://localhost:8080/manager/pluginManager.jsp?sudo").

Once a file has been chosen, it can be modified by changing the fields (and/or choosing a different file), and then selecting the "Submit" button.
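The viewing and editing rules in this section can be expressed as two predicates. This is an illustrative sketch only: the `User` and `Plugin` classes, their fields, and the function names are all hypothetical, and it models the general rules rather than the narrower default listing that applies without "sudo".

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class User:
    name: str
    is_admin: bool = False
    communities: FrozenSet[str] = frozenset()  # communities the user belongs to
    moderates: FrozenSet[str] = frozenset()    # communities the user moderates


@dataclass(frozen=True)
class Plugin:
    title: str
    owner: str
    communities: FrozenSet[str] = frozenset()  # communities it is shared with


def can_view(user: User, plugin: Plugin) -> bool:
    """Admins see everything; others see what they own or share a community with."""
    return (user.is_admin or plugin.owner == user.name
            or bool(user.communities & plugin.communities))


def can_edit(user: User, plugin: Plugin) -> bool:
    """Only admins, owners, and moderators of a shared community may edit."""
    return (user.is_admin or plugin.owner == user.name
            or bool(user.moderates & plugin.communities))


plugin = Plugin("example plugin", owner="alice", communities=frozenset({"analysts"}))
member = User("bob", communities=frozenset({"analysts"}))
print(can_view(member, plugin), can_edit(member, plugin))
```

A plain community member can therefore open a plugin and see its results, but needs moderator, owner, or admin rights before "Submit" will change it.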

Copying an existing custom task

Select the task to be copied from the top drop-down menu, then select "Copy Current Plugin". You must change the title; edit other fields as required.

Deleting files

Log in and choose a file as above, then select the "Delete" button.

Running an existing job

To start a job, set the "Next scheduled time" option to "Once Only"; the job will be scheduled as soon as possible and will run only once. To run a job at a regular frequency instead, set the frequency option to one of the other settings.

Following a job's progress

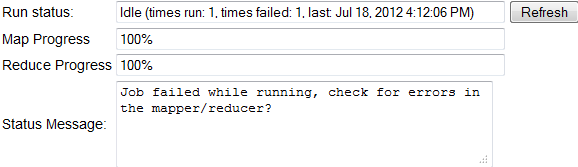

Once a job has been scheduled, you can track its progress by refreshing next to the run status. The current map and reduce completion status, as well as any errors that occurred while running, are displayed in an informational header.