This guide is meant to guide and give suggestions as to how to setup and configure the Hadoop installation once you have completed the RPM installation instructions found here: Infinit.e-hadoop-installer.

| Info |

|---|

This section covers the install of CDH3, which is supported for v0.4-. For v0.5, install CDH5 - see Installing CDH5 (including upgrading from CDH3) |

Log in

This guide will start off from the point which you navigate to http://INSTALL_SERVER:7180 in your browser (ie INSTALL_SERVER is the node on which "sh install.sh full" was run):

...

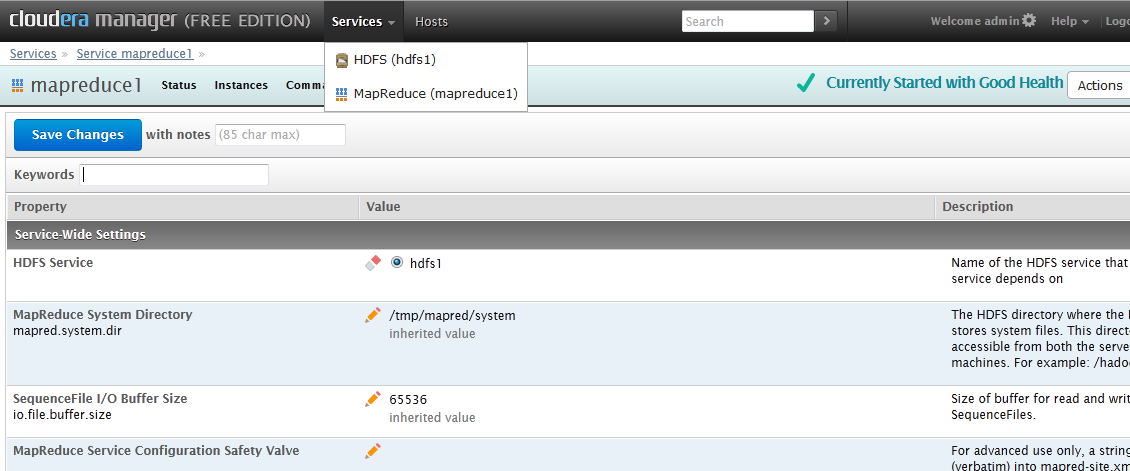

To change the mapreduce settings click on the mapreduce1 service and then click the configuration button at the top, you should come to a screen like this:

| Warning |

|---|

The following items must be changed for the full functionality of Infinit.e to be available:

|

We recommend changing these items:

- Number of tasks to run per JVM (mapred.job.reuse.jvm.num.tasks) to -1

- Use Compression on Map Outputs (mapred.compress.map.output) to false (uncheck the box)

- Maximum Number of Simultaneous Map Tasks to 2

- Maximum Number of Simultaneous Reduce Tasks to 1

- (On systems with large amount of RAM available: increase the size of "MapReduce Child Java Maximum Heap Size")

After making these changes, navigate to Instances (from the toolbar at the top of the page), the task trackers will show as having "outdated instances". Select them all and restart them.

...

The files in the folder usually include: core-site.xml, hadoop-env.sh, hdfs-site.xml, log4j.properties, mapred-site.xml, README.txt, ssl-client.xml.example. Just transfer all these files into the folder.

(Which parameters are taken from which files is somewhat involved and is discussed here)

Once you have completed these steps you should be able to schedule map reduce jobs via the API call: custom/mapreduce/schedulejob

...