...

This guide starts from the point at which you navigate to http://server:7180 in your browser:

The default login is admin/admin.

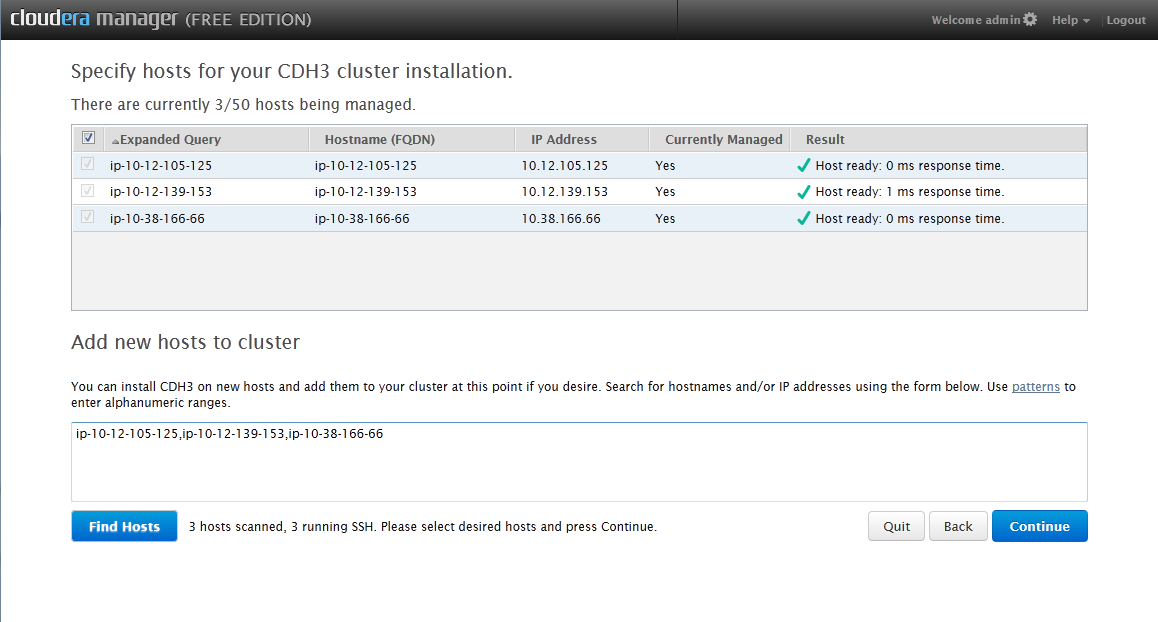
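If the login page does not load, it can help to confirm that port 7180 is reachable from your machine before troubleshooting further. The following is a minimal sketch using only the Python standard library; the function name `is_port_open` is a hypothetical helper introduced here, and `server` is the placeholder hostname used in this guide.

```python
import socket


def is_port_open(host: str, port: int = 7180, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the hostname and attempts a TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and DNS resolution failures.
        return False


# Example (replace "server" with your own hostname):
# print(is_port_open("server"))
```

A `False` result only tells you that the TCP connection failed. It does not distinguish between a stopped service, a firewall rule, or a wrong hostname.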

Once logged in, skip the prompts to upgrade and register. You should then arrive at a screen for adding hosts.

Enter a comma-delimited list of the IP addresses or hostnames you want in your cluster and click "find hosts". You will then see a list of the machines you can add to your Hadoop cluster. Select each node you want and click "continue". (Your window will look slightly different from mine because I have already added these servers.)

| Info |
|---|
| For EC2 deployments, you can add the publicly addressable hostnames or the private hostnames or IPs (but not the publicly addressable IPs). This is because the internal IP addresses are used by the installer (but the public hostnames resolve to the internal IPs from within the Amazon cloud, where the installer runs). |
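As an illustration of the rule above, here is a minimal sketch that builds the comma-delimited host list and rejects literal public IP addresses. The function name `build_host_list` and the example addresses are hypothetical. Hostnames are passed through unchanged, since the note says both public and private hostnames are acceptable.

```python
import ipaddress


def build_host_list(entries):
    """Join hostnames and private IPs into a comma-delimited string.

    Raises ValueError for a literal public IP, which the EC2 note says
    should not be used.
    """
    cleaned = []
    for entry in entries:
        entry = entry.strip()
        if not entry:
            continue  # Skip blank entries.
        try:
            addr = ipaddress.ip_address(entry)
        except ValueError:
            # Not an IP literal, so treat it as a hostname and keep it.
            cleaned.append(entry)
            continue
        if not addr.is_private:
            raise ValueError(f"{entry} is a public IP; use its hostname or private IP")
        cleaned.append(entry)
    return ",".join(cleaned)


# Example with made-up addresses:
# build_host_list(["10.0.0.5", "ip-10-0-0-6.ec2.internal"])
```

The resulting string can be pasted into the host search field. The check only looks at the form of each entry and does not verify that a hostname actually resolves.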


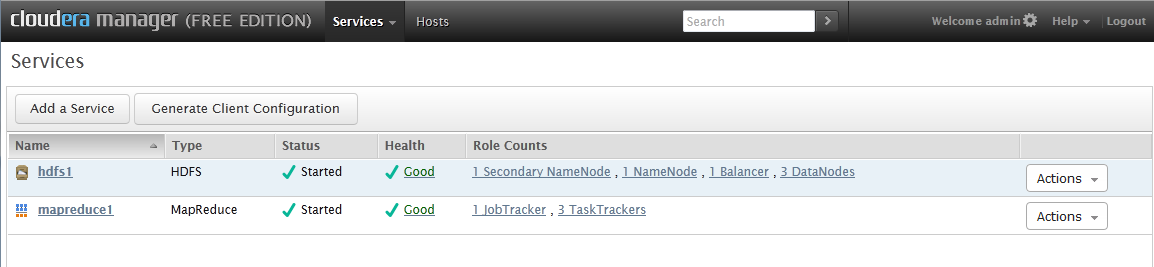

On the next screen, select the basic install, which includes MapReduce, Hue, and HDFS, and follow the prompts. Once everything is installed, you should come to a screen that looks like this:

There are some recommended configuration settings that Ikanow suggests changing before using the API server. These are optional (you can skip down to Generating Client Configuration if you want to get started with the default settings).

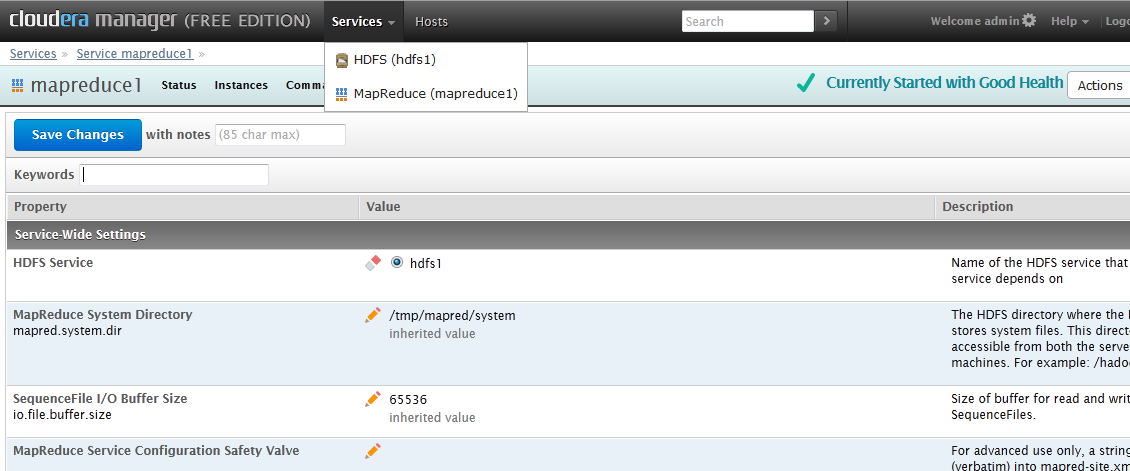

To change the MapReduce settings, click on the mapreduce1 service and then click the configuration button at the top. You should come to a screen like this:

We recommend changing these items:

...

Once you have completed these steps, you should be able to schedule MapReduce jobs via the API call: custom/mapreduce/schedulejob